Regulating Automated Vehicles with Human Drivers

Summary

Regulatory oversight of automated vehicle operation on public roads is being gamed by the vehicle automation industry via two approaches: (1) promoting SAE J3016, which is explicitly not a safety standard, as the basis for safety regulation, and (2) using the “Level 2 loophole” to deploy autonomous test platforms while evading regulatory oversight. Regulators are coming to understand that they need to do something to rein in the reckless driving and other safety issues that are putting their constituents at risk. We propose a regulatory approach that draws a clear distinction between production “cruise control” style automation, which can be subject to conventional regulatory oversight, and test platforms, which should be regulated by requiring the use of SAE J3018 for testing operational safety.

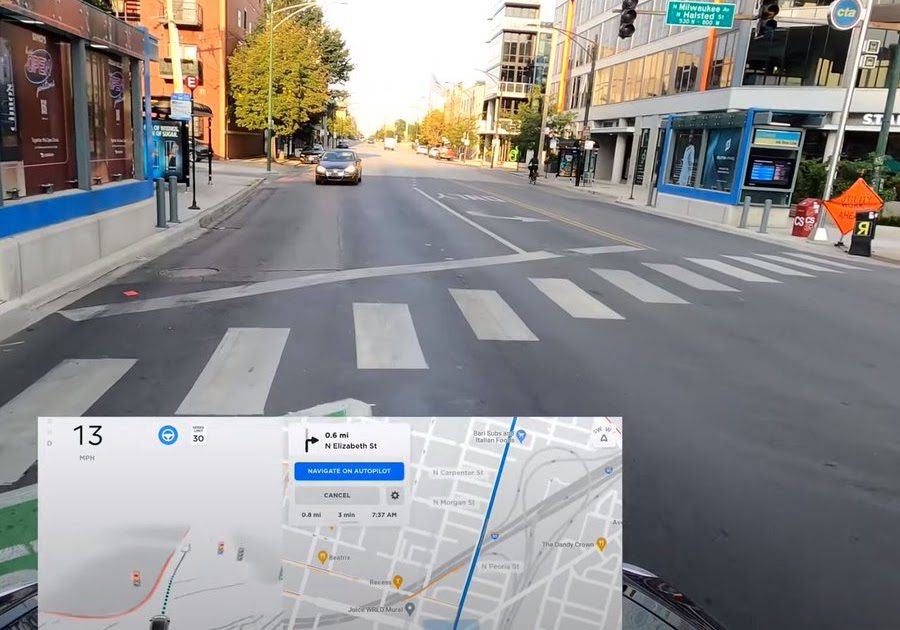

Video showing a Tesla FSD beta tester unsafely turning into oncoming traffic.

Do not use SAE J3016 in regulations

The SAE J3016 standards document has been promoted by the automotive industry for use in regulations, and in fact is the basis for regulations and policies at the US federal, state, and municipal levels. However, it is fundamentally unsuitable for the job. The issues with using SAE J3016 for regulations are many, so we provide a brief summary. (More detail can be found in Section V.A of our SSRN paper.)

SAE J3016 contains two different types of information. The first is a definition of terminology for automated vehicles, which is not really the problem, and in general could be suitable for regulatory use. The second is a definition of the infamous SAE Levels, which are highly problematic for a number of reasons, two of which stand out in practice.

In practice, one of the two big issues is the “Level 2 Loophole,” in which a company might claim that the presence of a safety driver makes its system Level 2, while insisting it does not intend to ever release that same automated driving feature as a higher-level feature. This could readily be gamed by, for example, saying that Feature X, which is in fact a prototype fully automated driving system, is Level 2 at first. When the company feels that the prototype is fully mature, it could simply rebrand it as Feature Y, slap on a Level 4 designation, and proceed to sell that feature without ever having applied for a Level 4 testing permit. We argue that this is essentially what Tesla is doing with its FSD “beta” program. Among other things, that program has yielded numerous social media videos of reckless driving (e.g., failure to stop at stop signs, failure to stop at red traffic signals, driving in opposing-direction traffic lanes), despite claims that its elite “beta test” drivers are selected to be safe.

The second big practical issue is that J3016 is not intended to be a safety standard, but is being used as one in regulations. This makes regulations more complex than they need to be and demands esoteric AV technical expertise that stretches the limits of regulatory agencies, especially municipalities. This is compounded by the AV industry promoting a series of myths as part of a campaign to undermine regulators’ effectiveness at protecting constituents from potential safety issues. The net result is that most regulations do not actually address the core safety issues related to on-road testing of this immature technology, in large part because regulators aren’t really sure how to do that.

For road testing safety purposes, regulators should focus on both the operational concept and the technology maturity of the vehicle being operated rather than on what might eventually be built as a product. In other words, “design intent” isn’t relevant to the risk being presented to road users when a test vehicle veers into opposing traffic. Avoiding crashes is the goal, not parsing overly complex engineering taxonomies.

The solution is to reject SAE J3016 levels as a basis for regulation, instead favoring other industry standards that are actually intended to be relevant to safety. (Again, using J3016 for terminology is OK if the terms are relevant, but not the level definitions.)

Four Regulatory Categories

We propose four regulatory categories, with details to follow:

Non-automated vehicles: These are vehicles that DO NOT control steering on a sustained basis in any operational mode. They might have adaptive speed control, automatic emergency braking, and active safety features that temporarily control steering (e.g., an emergency capability to swerve around obstacles, or bumping the steering wheel at lane boundaries to alert the driver).

Low automation vehicles: These are vehicles with automation that CAN control steering on a sustained basis (and, in practice, also vehicle speed). They are vehicles that ordinary drivers can operate safely and intuitively along the lines of a “cruise control” system that performs lane keeping in addition to speed control. In particular, they have these characteristics:

- Can be driven with acceptable safety by an ordinary licensed driver with no special training beyond that required for a non-automated version of the same vehicle type.
- Includes an effective driver monitoring system (DMS) to ensure adequate driver alertness despite inevitable automation complacency.
- Deters reasonably foreseeable misuse and abuse, especially with regard to the DMS and its operational design domain (ODD).
- Safety-relevant behavioral inadequacies consist of omissive behaviors rather than actively dangerous behavior.
- Safety-relevant issues are both intuitively understood and readily mitigated by driver intervention with conventional vehicle controls (steering wheel, brake pedal).
- Automation is not capable of executing turns at intersections.
- Field data monitoring indicates that vehicles remain at least as safe as non-automated vehicles that incorporate comparable active safety features over the vehicle’s life.

Highly automated vehicles: These are vehicles in which a human driver has no responsibility for safe driving. If any person inside the vehicle (or a tele-operator) can be blamed for a driving mishap, it is not a highly automated vehicle. Put simply, when automation is engaged it is safe for anyone in these vehicles to go to sleep, and there is no requirement for a continuous remote safety driver.

Automation test platforms: These are vehicles that have automated steering capability and have a person responsible for driving safety, but do not meet one or more of the listed requirements for low automation vehicles. In practical terms, such vehicles tend to be test platforms for capabilities that might someday be highly automated vehicles, but require a human test driver (either in the vehicle or remote) for operational safety.
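Read together, the four category definitions behave like a decision procedure. The Python sketch below encodes that procedure, assuming a regulator has already answered each listed criterion as a yes/no question. The field names (`sustained_steering_control`, `human_responsible_for_safety`, and so on) and the `classify` function are hypothetical illustrations, not part of any regulation or standard. Note that no SAE Level appears as an input.

```python
from dataclasses import dataclass
from enum import Enum


class Category(Enum):
    NON_AUTOMATED = "non-automated vehicle"
    LOW_AUTOMATION = "low automation vehicle"
    HIGHLY_AUTOMATED = "highly automated vehicle"
    TEST_PLATFORM = "automation test platform"


@dataclass
class VehicleProfile:
    # Hypothetical yes/no findings a regulator would establish for a vehicle.
    sustained_steering_control: bool
    human_responsible_for_safety: bool  # in-vehicle driver or remote operator
    safe_for_untrained_licensed_driver: bool
    effective_dms: bool
    deters_foreseeable_misuse: bool
    failures_only_omissive: bool
    issues_intuitive_and_mitigable: bool
    can_turn_at_intersections: bool
    field_data_at_least_as_safe: bool


def low_automation_requirements_met(v: VehicleProfile) -> bool:
    """True only if every listed low automation characteristic holds."""
    return all([
        v.safe_for_untrained_licensed_driver,
        v.effective_dms,
        v.deters_foreseeable_misuse,
        v.failures_only_omissive,
        v.issues_intuitive_and_mitigable,
        not v.can_turn_at_intersections,
        v.field_data_at_least_as_safe,
    ])


def classify(v: VehicleProfile) -> Category:
    # No sustained steering control in any mode: conventional vehicle rules apply.
    if not v.sustained_steering_control:
        return Category.NON_AUTOMATED
    # No person (in vehicle or remote) is responsible for safe driving.
    if not v.human_responsible_for_safety:
        return Category.HIGHLY_AUTOMATED
    if low_automation_requirements_met(v):
        return Category.LOW_AUTOMATION
    # A human is responsible for safety, but at least one requirement is unmet.
    return Category.TEST_PLATFORM
```

For example, a vehicle whose automation can execute turns at intersections, and which still relies on a human to intervene, lands in `TEST_PLATFORM` even if every other low automation criterion holds, regardless of how it is marketed. The hard part in practice is establishing each yes/no answer (e.g., whether a DMS is “effective”), which this sketch simply takes as given.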

Non-automated vehicles can be subject to regulatory requirements for conventional vehicles, and correspond to SAE Levels 0 and 1. We discuss each of the remaining three categories in turn.

Low automation vehicles

The idea of the low automation vehicle is that it is a tame enough version of automation that any licensed driver should be able to handle it. Think of it as “cruise control” that works for both steering and speed. It keeps the car moving down the road, but is quite stupid about what is going on around the car. DMS and ODD enforcement, along with mitigation of misuse and abuse, are required for operational safety. Required driver training should be no more than the trivial familiarization with controls one might expect, for example, during a car rental transaction at an airport rental lot.

Safety-relevant issues should be omissive (vehicle fails to do something) rather than errors of commission (vehicle does the wrong thing). For example, a vehicle might gradually drift out of lane while warning the driver it has lost lane lock, but it should not aggressively turn across a centerline into oncoming traffic. With very low capability automation this should be straightforward (although still technically challenging), because the vehicle isn’t trying to do more than drive within its lane. As capabilities increase, this becomes more difficult to design, but dealing with that is up to the companies who want to increase capabilities. We draw a hard line at capability to execute turns at intersections, which is clearly an attempt at high automation capabilities, and is well beyond the spirit of a “cruise control” type system.

An important principle is that human drivers of a production low automation vehicle should not serve as Moral Crumple Zones by being asked to perform beyond civilian driver capabilities to compensate for system shortcomings and work-in-progress system defects. If human drivers are being blamed for failing to compensate for behavior that would be considered defective in a non-automated vehicle (such as attempting to turn across opposing traffic for no reason), this is a sign that the vehicle is really a test platform in disguise.

Low automation vehicles could be regulated by holding them to the same regulations as non-automated vehicles, as is done today for Level 2 vehicles. The regulatory change would be to exclude from this category some vehicles currently called “Level 2” if they don’t meet all the listed requirements. In other words, any vehicle not meeting all the listed requirements would require special regulatory handling.

Highly automated vehicles

These are vehicles for which the driver is not responsible for safety, generally corresponding to SAE Levels 4 and 5. (In practice, some vehicles that are advertised as Level 3 will end up in this category if they do not hold the driver accountable for crashes when automation is engaged.)

Highly automated vehicles should be regulated by requiring conformance to industry safety standards such as ISO 26262, ISO 21448, and ANSI/UL 4600. This is an approach NHTSA has already proposed, so we recommend states and municipalities simply track that topic for the time being.

There is a separate issue of how to regulate testing of these vehicles without a safety driver, but that issue is beyond the scope of this essay.

Automation test platforms

These are vehicles that need skilled test drivers or remote safety monitoring to operate safely on public roads. Operation of such vehicles should be done in accordance with SAE J3018, which covers safety driver skills and operational safety procedures, and should also be done under the oversight of a suitable Safety Management System (SMS) such as one based on the AVSC SMS guidelines.

Crashes while automation is turned on are generally attributed to a failure of the safety driver to cope with dangerous vehicle behavior, with dangerous behavior being an expectation for any test platform. (The point of a test platform is to see if there are any defects, which means defects must be expected to manifest during testing.)

In other words, with an automation test platform, safety responsibility primarily rests with the safety driver and test support team, not the automation. Test organizations should convince regulators that testing will, overall, present an acceptably low risk to other road users. Among other things, this will require that safety drivers be specifically trained to handle the risks of testing, which differ significantly from the risks of normal driving. For example, using retail car customers as testers, without the special training called for by SAE J3018 and without the benefit of an appropriate SMS framework, should be considered unreasonably risky.

This category covers all vehicles currently said to be Level 4/5 test vehicles, and also any other Level 2 or Level 3 vehicles that make demands on driver attention and reaction capabilities that are excessive for drivers without special tester training.

Regulation of automation test platforms should concentrate on driver safety, per my State/Municipal DOT regulatory playbook. This includes specifically requiring compliance with practices in SAE J3018 and having an SMS that is at least as strong as the one discussed in the AVSC SMS guidelines.

Wrap-up

Regulatory data reporting for automated vehicles at the municipal and state levels should concentrate on collecting mishap data to ensure that the driver+vehicle combination is acceptably safe. A high rate of crashes indicates that either the drivers aren’t trained well enough, or the vehicle is defective. Which way you look at it depends on whether you’re a state/municipal government or the US government, and whether the vehicle is a test platform or not. But the reality is that if drivers have trouble driving the vehicles, you need to do something to fix that situation before there is a severe injury or fatality on your watch.
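One simple way to put such mishap data to use is to normalize crash counts by miles driven, so that fleets of different sizes can be compared, and then check the result against a comparison rate. The Python sketch below assumes a hypothetical `MishapReport` holding a crash count and miles driven, plus a baseline rate chosen by the regulator (for example, a rate for comparable non-automated vehicles). None of these names or thresholds come from an existing reporting requirement.

```python
from dataclasses import dataclass


@dataclass
class MishapReport:
    # Hypothetical reporting fields for one driver+vehicle fleet over one period.
    crashes: int
    miles_driven: float


def crashes_per_million_miles(report: MishapReport) -> float:
    """Normalize the crash count by exposure (miles driven)."""
    if report.miles_driven <= 0:
        raise ValueError("miles_driven must be positive")
    return report.crashes * 1_000_000 / report.miles_driven


def needs_intervention(report: MishapReport, baseline_per_million: float) -> bool:
    """Return True if the observed crash rate exceeds the baseline rate.

    baseline_per_million is an assumed, regulator-chosen comparison rate;
    this sketch does not prescribe a value.
    """
    return crashes_per_million_miles(report) > baseline_per_million
```

For instance, 3 crashes over 500,000 miles works out to 6.0 crashes per million miles. A raw rate comparison like this ignores statistical uncertainty, which is large when few miles have been driven, so a real reporting program would want confidence bounds rather than a single threshold check.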

The content in this essay is an informal summary of the content in Section V of: Widen, W. & Koopman, P., “Autonomous Vehicle Regulation and Trust” SSRN, Nov. 22, 2021. In case of doubt or ambiguity, that SSRN publication should be consulted for more comprehensive treatment.

—–

Philip Koopman is an associate professor at Carnegie Mellon University specializing in autonomous vehicle safety. He is on the voting committees for the industry standards mentioned. Regulators are welcome to contact him for support.